AI Is Disrupting Software Testing – But Are We Ready?

AI is no longer a futuristic concept in software testing—it’s already here, embedded in the applications we build and the tools we use. This rapid shift has introduced a new kind of urgency: How do we ensure that the very systems powered by AI are thoroughly and reliably tested?

In a recent webinar, Paul Downes of Accenture and Mark Creamer of ConformIQ dissected this challenge. Downes framed the situation succinctly: we’re undergoing a “complete disruption of everything, quality engineering included, by the evolutions in AI and GenAI.”

This disruption isn’t just about tools. It’s about mindset, strategy, and trust.

The Hidden Challenges of AI in Testing

The Trust Problem

While Generative AI (GenAI) opens up incredible possibilities—writing test scripts, analyzing defects, and assisting in requirements gathering—it also comes with a critical flaw: unpredictability. It can generate plausible yet incorrect outputs with striking confidence.

As Downes put it, trust in AI is no longer optional: “It must be both emotional and cognitive. People need to feel it’s right—and know it’s right.”

Satisfying both conditions is difficult when a system's mistakes look just as convincing as its correct answers. In high-stakes environments like finance or healthcare, a “confidently wrong” suggestion can trigger cascading failures.

Real-World Risks

Organizations rushing to adopt AI without a strategic plan often discover the hidden costs of validation. You may save time generating artifacts, only to spend more verifying their accuracy.

Poorly tested AI systems have already led to security lapses, compliance breaches, and undetected bugs. In a world where AI agents increasingly act on behalf of users, the margin for error narrows dramatically.

Strategic AI: Using the Right Technology for the Right Task

Symbolic AI vs. Generative AI: A Clear Distinction

The rise of AI in software testing has led to a surge in tools claiming automation and intelligence. But not all AI is created equal — and treating it as a monolith is a fast track to inefficiencies and risk.

GenAI has clear strengths: it excels at brainstorming, generating synthetic data, and helping teams explore test scenarios quickly. It’s collaborative, creative, and fast. But that speed comes at a cost: GenAI can produce flawed outputs with confidence, creating a false sense of reliability. As Downes noted in the webinar, “AI can recommend the wrong solution and sound right.”

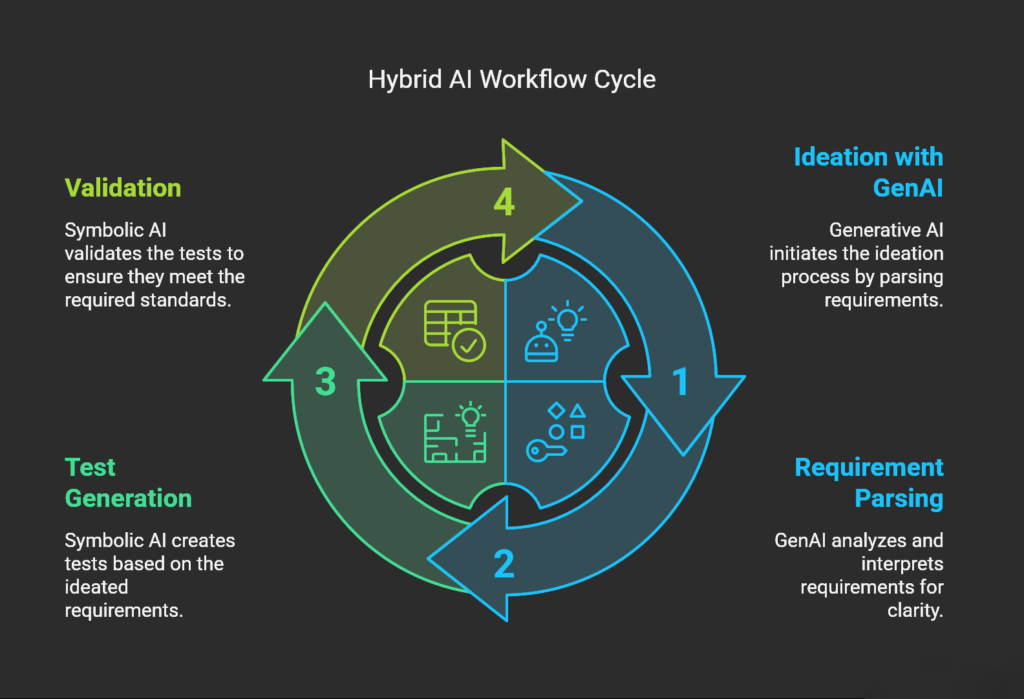

In contrast, Symbolic AI operates within a structured rule-based system. It’s predictable, verifiable, and transparent — ideal for tasks where consistency, traceability, and repeatability are non-negotiable, such as test case generation and automation logic.
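To make “predictable, verifiable, and repeatable” concrete, here is a minimal sketch of rule-based test generation in Python. It assumes the system under test can be described as a small state machine. The login model, its state and action names, and the functions `shortest_paths` and `transition_tests` are hypothetical illustrations, not ConformIQ's product, API, or algorithm. The generator walks the model with breadth-first search and emits one test per transition, so the same model always yields the same test set.

```python
from collections import deque

# Hypothetical login-flow model (an assumption for illustration):
# each state maps to a list of (action, next_state) transitions.
MODEL = {
    "LoggedOut": [("enter_valid_credentials", "LoggedIn"),
                  ("enter_invalid_credentials", "Error")],
    "Error": [("retry", "LoggedOut")],
    "LoggedIn": [("logout", "LoggedOut")],
}


def shortest_paths(model, start):
    """Breadth-first search: shortest action sequence from start to each reachable state."""
    paths = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, nxt in model.get(state, []):
            if nxt not in paths:  # first visit is the shortest path in BFS
                paths[nxt] = paths[state] + [action]
                queue.append(nxt)
    return paths


def transition_tests(model, start):
    """One test per transition: drive the system to the source state, then fire the action."""
    paths = shortest_paths(model, start)
    tests = []
    for state in sorted(model):  # sorted order keeps the output deterministic
        if state not in paths:
            continue  # unreachable state: its transitions cannot be exercised
        for action, nxt in model[state]:
            tests.append((paths[state] + [action], nxt))
    return tests


for steps, expected in transition_tests(MODEL, "LoggedOut"):
    print(" -> ".join(steps), "| expect:", expected)
```

Because every test is derived from an explicit model, each one traces back to the transition it covers, and rerunning the generator on an unchanged model reproduces exactly the same suite.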

This is where strategic alignment matters.

Rather than choosing between GenAI and Symbolic AI, the smartest teams are integrating both, using GenAI for upfront exploration and Symbolic AI to anchor the process in precision. It’s a hybrid model built for reliability and agility, and one that reflects how QA work actually happens in practice.

The Rise of AI Agents in Testing

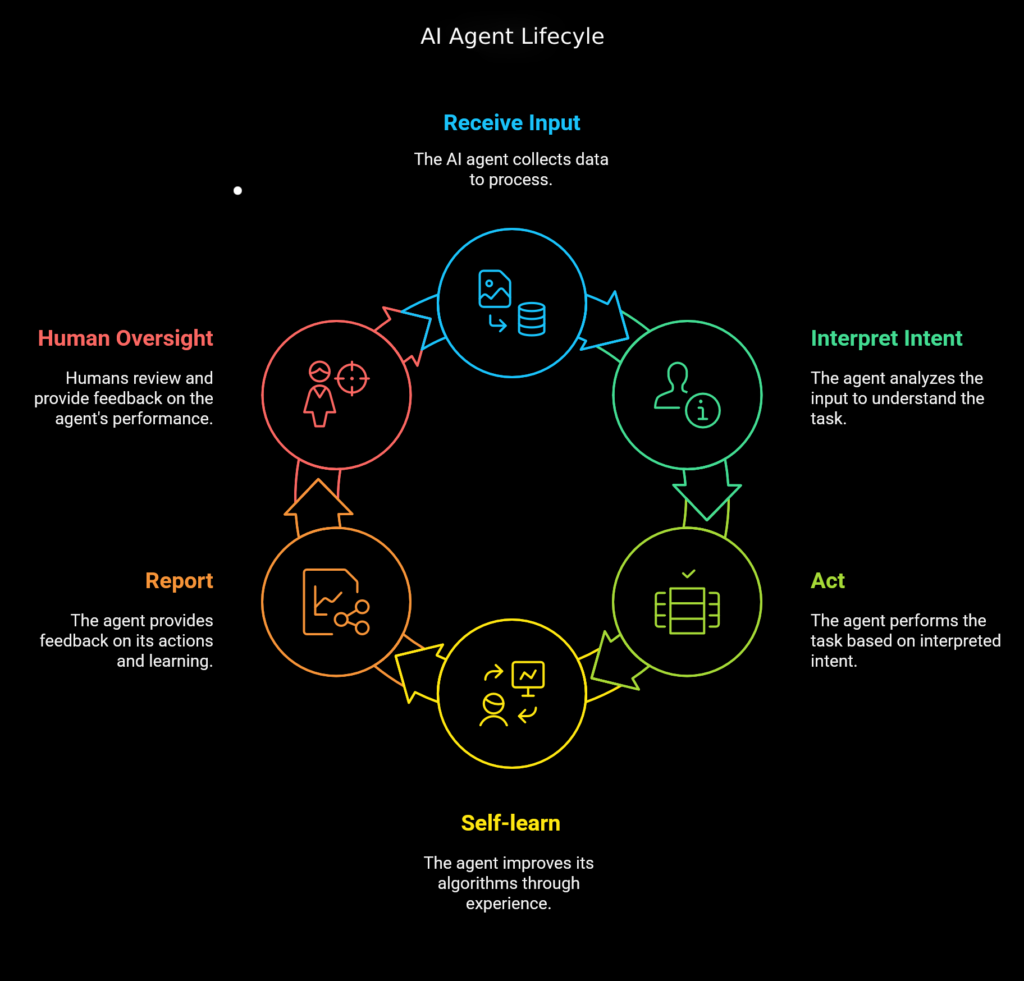

AI in software testing is no longer just about scripts and automation. We’re entering the age of intelligent agents—autonomous, goal-driven entities that interact dynamically with environments and users.

Downes illustrated this future through real-world applications like travel assistants and fraud detection systems, emphasizing that AI agents don’t simply execute tasks—they interpret intent.

In testing, that means evolving toward self-healing test suites, AI-driven risk analysis, and context-aware automation. But this advancement brings an even greater need for human oversight. Implicit requirements, meaning what the system should do even though no one has written it down, still require expert interpretation.

Why Generative AI Alone Isn’t Enough — and the Case for a Smarter Approach

Generative AI is powerful. It can brainstorm, draft, and accelerate ideation. But in the world of software testing, power without precision can be costly.

Teams that relied heavily on GenAI for test automation found themselves trapped in cycles of rework. The output seemed right — but wasn’t verifiable. Without structure, even well-meaning AI can lead testers down the wrong path. As Paul Downes warned, there’s a danger in trusting an AI system that can be “confidently wrong.”

This is where many organizations hit the wall: they expected efficiency but got unpredictability.

Mark Creamer shared that true productivity gains emerged only when teams paired GenAI with Symbolic AI. Generative AI was used for upfront collaboration, such as gathering requirements and suggesting test ideas, while the actual execution and automation were powered by Symbolic AI, which delivered predictable, verifiable, and repeatable results.

The result? Fewer validation loops. Cleaner pipelines. And a measurable improvement in testing efficiency.

It’s not about choosing one over the other; it’s about knowing when to use each. That’s the real strategic shift: a hybrid approach that plays to the strengths of both GenAI and Symbolic AI.

The Future of AI in Software Testing & What You Can Do Now

AI is reshaping software testing. The pace of innovation is thrilling, but success will belong to the teams that apply AI strategically.

Here are three key takeaways from the webinar:

- AI-powered testing requires trust and validation to be effective.

- AI agents are the next evolution in software testing, bringing adaptability.

- A balanced AI approach is key—leveraging Generative AI for ideation and Symbolic AI for precision.

Ready to See Smarter AI Testing in Action?

This blog only scratches the surface. The real insights — the strategic frameworks, real-world examples, and practical breakdowns — are all in the full session.

Watch the full webinar on demand to explore how a hybrid AI approach (Generative + Symbolic) is transforming testing for leading enterprises — featuring expert commentary from Accenture and ConformIQ.

Curious how this approach could work for your team? Contact us to connect with an expert and start tailoring your own AI-powered testing strategy today.