The automotive industry constantly faces the significant challenge of releasing cars with no software defects. It goes without saying that a software fault in the braking system, the traction control system or the assisted steering system can be catastrophic, while problems in non-safety-critical components may “only” annoy the user, yet still damage the brand's reputation. The recalls and redesigns we have all been reading about in the news illustrate how difficult this challenge is. As the amount of software in modern cars has kept growing over the past decades, so has the number of recalls and redesigns. The cost of recalling a vehicle can be huge, with a major economic impact on the car manufacturer and/or its component supplier.

Car manufacturers already spend a great deal of money, time and resources on software testing, and the amount of testing is not expected to decrease. On the contrary, as the amount and complexity of the embedded software in cars keep increasing, the testing challenge also keeps growing, and these companies are investing heavily in testing. In looking for ways to improve their testing, most if not all of them have explored Model-Based Testing (MBT) over the last few years, as MBT could be an efficient way of addressing this growing challenge. MBT promises to improve the quality of testing, for example by automatically identifying corner cases that traditional manual methods are prone to overlook, while at the same time keeping the cost of testing under control.

However, there have been quite a few disappointments among the adopters of MBT technologies. The adopted tools have simply not lived up to expectations, either by producing tests of suboptimal quality or by requiring the user to spend significant amounts of time creating the models and then maintaining them. The problem is that these tools are like slot machines: every time you run test generation, you get different results and different coverage. Worse, most of these tools suffer from serious coverage and performance issues (the generated tests do not cover the model thoroughly enough), and they either do not report coverage at all or report it using elementary or proprietary metrics, making it next to impossible to assess the actual quality of your testing efforts. Beyond that, the modeling formalisms they adopt are so rigid and foreign that it is very difficult to create the model in the first place, let alone maintain it. They provide no conventions or layers that would help the user understand the model.

To mask the simplicity and incompleteness of these approaches, which have caused some nasty disappointments, I have seen tool vendors add some ludicrous features, such as an “ROI calculator”, that, I am sorry to say, cracked me up; I laughed out loud when I saw them!

For a number of months, Berner & Mattner has worked with us at Conformiq to develop a solution that aims to address these automotive testing challenges and the shortcomings of current tools. The tool we have been working on together is called MODICA. It addresses the challenge of systematic test case design by deriving test cases from a model of the anticipated usage of the system, with Conformiq test generation technology at its core. It enables end-to-end test automation over the entire software development lifecycle and provides systematic, deterministic coverage of system behavior with full traceability. MODICA is not a slot machine; on the contrary, it provides systematic and repeatable system coverage and aims to achieve full coverage of all test targets with the smallest possible number of test steps in the test cases.

Unlike the models used in Conformiq Designer and Conformiq Creator, MODICA models are not system models; they are tester, or usage, models. That is, with MODICA users describe neither the individual test cases nor the expected behavior of the system under test (SUT), but instead the external environment of the SUT, i.e., the anticipated usage of the system. This style of modeling is close to a tester's traditional way of thinking. After all, MODICA models essentially capture the operations of the tester and require no programming skills.

While the general aspects and advantages of the MODICA approach are domain independent, MODICA provides a custom-tailored adaptation of this approach for the automotive industry to make it more applicable, more intuitive to use and faster to deploy. This enables domain experts to assist in creating the model, as they can readily relate to and understand its concepts, a major benefit compared to alternative approaches.

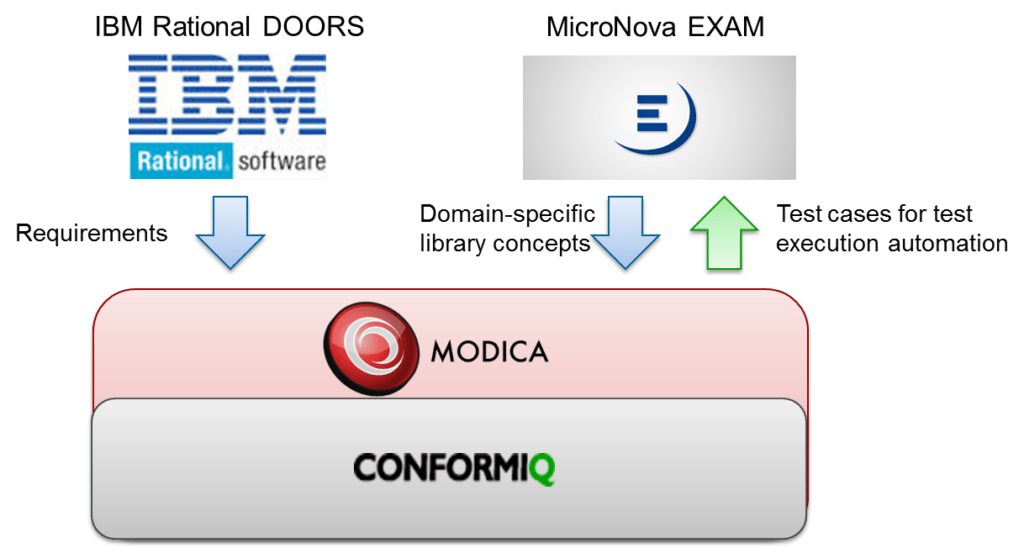

In MODICA, the application logic is captured using state charts augmented with requirements and domain-specific library concepts. Currently MODICA provides out-of-the-box integration with IBM Rational DOORS for requirements handling and with MicroNova EXAM. Note that EXAM's domain and vocabulary are those of the tester, not of the system under test, which lends itself naturally to a usage-model-based approach. For people in organizations that have already deployed these tools, the learning curve is short, as MODICA leverages exactly the same infrastructure and vocabulary as before. What really changes is that, instead of creating each and every test sequence and test case manually, you create a state chart model.
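To make the idea of deriving tests from a state chart usage model more concrete, here is a minimal, generic sketch in Python. It is not MODICA's or Conformiq's algorithm: the model format, the state and action names and the functions `shortest_prefixes` and `transition_tests` are all hypothetical, chosen only for illustration. The model describes tester actions, and the sketch derives one test per transition (the shortest action sequence that reaches the transition's source state, followed by the transition itself). Because nothing in it is random, repeated runs always produce the same tests.

```python
from collections import deque

# Hypothetical usage model: each state maps to a list of (tester_action, next_state).
# States and actions are illustrative only; they are not MODICA or EXAM vocabulary.
USAGE_MODEL = {
    "Off": [("switch_ignition_on", "Idle")],
    "Idle": [("press_brake", "Braking"), ("switch_ignition_off", "Off")],
    "Braking": [("release_brake", "Idle")],
}


def shortest_prefixes(model, start):
    """Breadth-first search: the shortest action sequence reaching each state."""
    prefixes = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, target in model[state]:
            if target not in prefixes:
                prefixes[target] = prefixes[state] + [action]
                queue.append(target)
    return prefixes


def transition_tests(model, start):
    """Derive one test per transition: shortest path to its source, then the transition.

    The iteration order is fixed by the model, so repeated runs yield identical tests.
    """
    prefixes = shortest_prefixes(model, start)
    tests = []
    for state in model:
        if state not in prefixes:
            continue  # unreachable state: its transitions cannot be covered
        for action, _target in model[state]:
            tests.append(prefixes[state] + [action])
    return tests


if __name__ == "__main__":
    for i, steps in enumerate(transition_tests(USAGE_MODEL, "Off"), 1):
        print(f"Test {i}: {' -> '.join(steps)}")
```

For this toy model the sketch yields four tests, one per transition. It makes no attempt to minimize the total number of test steps (the first test, for instance, is a prefix of the others); a production generator that aims for full coverage with the fewest steps would have to merge or reorder such sequences.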

MODICA’s approach has been used successfully with a lighthouse customer in the automotive sector. This customer increased their testing efficiency while also improving the quality of testing, in a fully automated and systematic manner.

I urge you to check out MODICA! https://www.assystem-germany.com/en/products/modica/